PLOS

Scientists have built a computer model that shows how bees use vision to detect the movement of the world around them and avoid crashing. This research, published in PLOS Computational Biology, is an important step in understanding how the bee brain processes the visual world and will aid the development of robotics.

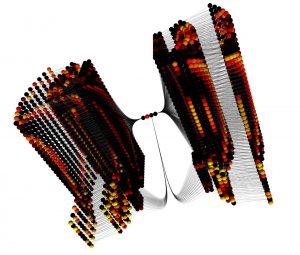

The study, by Alexander Cope and his coauthors at the University of Sheffield, shows how bees estimate the speed of motion of the visual world around them (its optic flow) and use this to control their flight. The model is based on honeybees because they are excellent navigators and explorers and use vision extensively in these tasks, despite having a brain of only one million neurons (compared with the human brain’s 100 billion).

Tested in a virtual world, the model shows how bees can navigate complex environments using a simple extension of known neural circuits. It reproduces the detailed behaviour of real bees, using optic flow to fly down a corridor, and it also matches how their neurons respond.
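To make the corridor behaviour concrete, here is a minimal sketch of the classic "balance the optic flow on both sides" idea. It is not the Sheffield team's neural model. It assumes a bee flying at constant speed parallel to two walls, where a wall point seen side-on sweeps past at an angular speed of speed / distance, and a steering rule that nudges the bee away from the side with the stronger flow. The function names, gain and time step are hypothetical choices for illustration.

```python
def lateral_flow(speed: float, distance: float) -> float:
    """Angular speed (rad/s) of a wall point seen at 90 degrees to the heading."""
    return speed / distance


def simulate_corridor(width: float = 1.0, start_y: float = 0.2, speed: float = 1.0,
                      gain: float = 0.05, dt: float = 0.1, steps: int = 200) -> float:
    """Return the final sideways position of a simulated bee in a corridor.

    y = 0 is the left wall and y = width is the right wall (hypothetical setup).
    """
    y = start_y
    for _ in range(steps):
        left = lateral_flow(speed, y)           # flow from the left wall
        right = lateral_flow(speed, width - y)  # flow from the right wall
        # Stronger flow on the left pushes y up (to the right), and vice versa.
        y += gain * (left - right) * dt
    return y


print(simulate_corridor(start_y=0.2))  # ends very close to the midline, 0.5
print(simulate_corridor(start_y=0.8))  # approaches 0.5 from the other side
```

Because the flow from each wall grows as the bee gets closer to it, the simulated bee drifts away from the nearer wall until the two flows are equal, which in a symmetric corridor happens on the midline.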

This research ultimately shows that how a bee moves determines which features of the world it sees. It also explains why bees are confused by windows: because windows are transparent, they generate hardly any optic flow as a bee approaches them.
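Why a featureless surface produces no motion signal can be sketched with a Hassenstein–Reichardt correlator, a textbook model of insect motion detection. Using it here is an assumption: the article describes the study's circuit only as "a simple extension to the known neural circuits". The sketch treats the window as a perfectly uniform patch, which simplifies real glass. Two neighbouring receptors watch a 1-D pattern drifting past. Each receptor's delayed signal is multiplied by its neighbour's current signal, and the two mirror-image products are subtracted. The pattern values and the function name are hypothetical.

```python
def hr_response(pattern: list[float], steps: int = 100,
                velocity: int = 1, delay: int = 1) -> float:
    """Mean output of one Hassenstein-Reichardt detector viewing a moving 1-D pattern.

    Receptor A sits at position 0 and receptor B at position 1. The pattern
    moves by `velocity` pixels per time step (positive = from A towards B).
    """
    n = len(pattern)

    def lum(pos: int, t: int) -> float:
        # Luminance seen at `pos` at time `t` for a rigidly drifting pattern.
        return pattern[(pos - velocity * t) % n]

    total = 0.0
    for t in range(delay, steps):
        a, b = lum(0, t), lum(1, t)
        a_delayed, b_delayed = lum(0, t - delay), lum(1, t - delay)
        # Correlate delayed A with current B, minus the mirror-image term.
        total += a_delayed * b - b_delayed * a
    return total / (steps - delay)


stripes = [1.0, 1.0, 0.0, 0.0] * 8  # textured surface (e.g. a patterned wall)
glass = [0.8] * 32                  # featureless surface (idealised window)

print(hr_response(stripes))               # positive: motion detected
print(hr_response(stripes, velocity=-1))  # negative: opposite direction
print(hr_response(glass))                 # 0.0: no texture, no motion signal
```

The subtraction makes the detector direction-selective. It also cancels exactly when every receptor sees the same constant luminance, so in this idealised setting a textureless surface yields no optic-flow signal however fast the bee approaches it.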

Understanding how bees avoid walls, and what information they can use to navigate, moves us closer to developing efficient algorithms for navigation and routing, which would greatly enhance the performance of autonomous flying robots.